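Introduction

A Kubernetes cluster does not manage persistent data storage for you: by default, data written inside a container is lost when the Pod is recreated. To keep a database's data safe, we can mount a disk for it.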

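hostPath: mounts a directory on the node into the Pod. It is no longer officially recommended and is meant for single-node testing only; it is not suitable for multi-node clusters.

Local disk: mounts a directory on a specific node, but the Pod must then be restricted to run on that node.

Cloud storage: not tied to any node and unaffected by the cluster, so it is safe and stable. It must be provided by a cloud vendor; bare-metal clusters do not have it.

NFS: not tied to any node and unaffected by the cluster.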
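The NFS option is the only one in the list above that has no manifest later in this article, so here is a minimal sketch of a Pod mounting an NFS export directly. The Pod name, the server address nfs.example.com, and the export path /exports/data are all hypothetical placeholders, and a generic busybox container stands in for a real workload.

```yaml
# Sketch only: server and path below are assumed values, not from this article.
apiVersion: v1
kind: Pod
metadata:
  name: nfs-demo                  # hypothetical name
spec:
  containers:
    - name: app
      image: busybox
      command: ["sleep", "3600"]  # keep the container alive for inspection
      volumeMounts:
        - mountPath: /data        # where the export appears inside the container
          name: shared-data
  volumes:
    - name: shared-data
      nfs:
        server: nfs.example.com   # assumed NFS server address
        path: /exports/data       # assumed exported directory
```

Because the data lives on the NFS server rather than on a node, the Pod can be scheduled onto any node that can reach that server.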

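hostPath mounts: pros and cons

Mounts a directory on the node into the Pod, but it is no longer recommended (see the official documentation).

Configuration is simple, but you must manually pin the Pod to a fixed node.

For single-node testing only; not suitable for multi-node clusters.

minikube provides hostPath storage (see the minikube documentation).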
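The point about pinning the Pod to a fixed node can be sketched with a nodeSelector. This is one possible approach, not necessarily the one the author had in mind. It assumes a node whose kubernetes.io/hostname label is minikube, and the Pod name is hypothetical.

```yaml
# Sketch: pin a Pod to one node so its hostPath data is always found there.
apiVersion: v1
kind: Pod
metadata:
  name: mongo-hostpath-demo              # hypothetical name
spec:
  nodeSelector:
    kubernetes.io/hostname: minikube     # assumed node name; adjust to your cluster
  containers:
    - name: mongo
      image: mongo:4.4
      volumeMounts:
        - mountPath: /data/db
          name: mongo-data
  volumes:
    - name: mongo-data
      hostPath:
        path: /data/mongo-data
        type: DirectoryOrCreate          # create the directory if it is missing
```

Without the nodeSelector, a rescheduled Pod in a multi-node cluster could land on a different node and start with an empty directory.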

Example

Create the YAML file

mongo.yaml

```yaml
apiVersion: apps/v1
kind: StatefulSet
metadata:
  name: mongodb
spec:
  serviceName: mongodb
  replicas: 1
  selector:
    matchLabels:
      app: mongodb
  template:
    metadata:
      labels:
        app: mongodb
    spec:
      containers:
        - name: mongo
          image: mongo:4.4
          imagePullPolicy: IfNotPresent
          volumeMounts:
            - mountPath: /data/db
              name: mongo-data
      volumes:
        - name: mongo-data
          hostPath:
            path: /data/mongo-data
            type: DirectoryOrCreate
---
apiVersion: v1
kind: Service
metadata:
  name: mongodb
spec:
  selector:
    app: mongodb
  type: ClusterIP
  clusterIP: None
  ports:
    - port: 27017
      protocol: TCP
      targetPort: 27017
```

Deploy the YAML

```bash
$ kubectl apply -f mongo.yaml
statefulset.apps/mongodb created
service/mongodb created
$ kubectl get pod -o wide
NAME        READY   STATUS    RESTARTS   AGE   IP           NODE       NOMINATED NODE   READINESS GATES
mongodb-0   1/1     Running   0          14s   172.17.0.2   minikube   <none>           <none>
```

Check that the directory was mounted, i.e. that the directory /data/mongo-data exists on the node.

```bash
$ docker exec -it minikube bash
root@minikube:/$ cd /data/mongo-data/
root@minikube:/data/mongo-data$ ls
WiredTiger       WiredTiger.turtle  WiredTigerHS.wt  collection-0-4204941809035389281.wt  collection-4-4204941809035389281.wt  index-1-4204941809035389281.wt  index-5-4204941809035389281.wt  journal      sizeStorer.wt
WiredTiger.lock  WiredTiger.wt      _mdb_catalog.wt  collection-2-4204941809035389281.wt  diagnostic.data                      index-3-4204941809035389281.wt  index-6-4204941809035389281.wt  mongod.lock  storage.bson
```

Higher-level abstractions

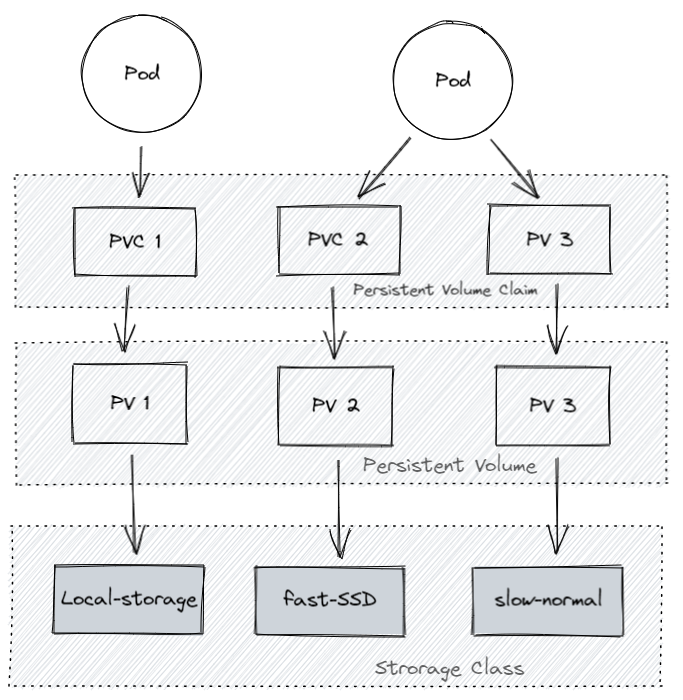

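Storage Class (SC): groups storage volumes into classes, for example SSD, ordinary disk, or local disk, to be used as needed. See the official documentation.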

sc.yaml

```yaml
apiVersion: storage.k8s.io/v1
kind: StorageClass
metadata:
  name: slow
provisioner: kubernetes.io/aws-ebs
parameters:
  type: io1
  iopsPerGB: "10"
  fsType: ext4
```

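Persistent Volume (PV): describes the concrete details of a volume, such as disk size and access mode. See the official documentation for details, the available volume types, and a Local volume example.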

pv.yaml

```yaml
apiVersion: v1
kind: PersistentVolume
metadata:
  name: mongodata
spec:
  capacity:
    storage: 2Gi
  volumeMode: Filesystem
  accessModes:
    - ReadWriteOnce
  persistentVolumeReclaimPolicy: Delete
  storageClassName: local-storage
  local:
    path: /root/data
  nodeAffinity:
    required:
      nodeSelectorTerms:
        - matchExpressions:
            - key: kubernetes.io/hostname
              operator: In
              values:
                - node2
```

Persistent Volume Claim (PVC): a declaration of storage requirements. Think of it as a request form: the system uses it to find a suitable PV.

pvc.yaml

```yaml
apiVersion: v1
kind: PersistentVolumeClaim
metadata:
  name: mongodata
spec:
  accessModes: ["ReadWriteOnce"]
  storageClassName: "local-storage"
  resources:
    requests:
      storage: 2Gi
```

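Why so many layers of abstraction

Better division of labor: operations staff provide the storage, while developers do not need to care about disk details and only have to write a request form (a PVC).

It lets cloud providers offer different types of storage: developers do not need to know the configuration details and only have to submit a claim.

Dynamic provisioning: once a developer has written the claim, the provider can automatically create the required volume on demand.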

See Tencent Cloud for an example.
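As a rough illustration of dynamic provisioning, the claim below references the slow StorageClass from sc.yaml instead of a pre-created PV. It is a sketch that assumes a cluster where the provisioner behind slow is actually available. The claim name and the requested size are hypothetical.

```yaml
# Sketch: a claim that asks the "slow" StorageClass to create a volume on demand.
apiVersion: v1
kind: PersistentVolumeClaim
metadata:
  name: mongodata-dynamic       # hypothetical name
spec:
  accessModes: ["ReadWriteOnce"]
  storageClassName: slow        # the StorageClass defined in sc.yaml
  resources:
    requests:
      storage: 10Gi             # assumed size
```

No PersistentVolume manifest is written by hand here; if the provisioner works, a matching PV should be created and bound to the claim automatically.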

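Local disk example

Dynamic provisioning is not supported for local disks; the PV must be created in advance.

The configuration can be split across several files or kept in a single file, with the documents separated by ---.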

mongo.yaml

```yaml
apiVersion: apps/v1
kind: StatefulSet
metadata:
  name: mongodb
spec:
  serviceName: mongodb
  replicas: 1
  selector:
    matchLabels:
      app: mongodb
  template:
    metadata:
      labels:
        app: mongodb
    spec:
      containers:
        - name: mongo
          image: mongo:4.4
          imagePullPolicy: IfNotPresent
          volumeMounts:
            - mountPath: /data/db
              name: mongo-data
      volumes:
        - name: mongo-data
          persistentVolumeClaim:
            claimName: mongodata
---
apiVersion: v1
kind: Service
metadata:
  name: mongodb
spec:
  clusterIP: None
  ports:
    - port: 27017
      protocol: TCP
      targetPort: 27017
  selector:
    app: mongodb
  type: ClusterIP
---
apiVersion: storage.k8s.io/v1
kind: StorageClass
metadata:
  name: local-storage
provisioner: kubernetes.io/no-provisioner
volumeBindingMode: WaitForFirstConsumer
---
apiVersion: v1
kind: PersistentVolume
metadata:
  name: mongodata
spec:
  capacity:
    storage: 2Gi
  volumeMode: Filesystem
  accessModes:
    - ReadWriteOnce
  persistentVolumeReclaimPolicy: Delete
  storageClassName: local-storage
  local:
    path: /root/data
  nodeAffinity:
    required:
      nodeSelectorTerms:
        - matchExpressions:
            - key: kubernetes.io/hostname
              operator: In
              values:
                - minikube
---
apiVersion: v1
kind: PersistentVolumeClaim
metadata:
  name: mongodata
spec:
  accessModes: ["ReadWriteOnce"]
  storageClassName: "local-storage"
  resources:
    requests:
      storage: 2Gi
```

Deploy the YAML:

Because the PV points at /root/data, that directory must be created beforehand on the node named in the PV's nodeAffinity.

```bash
$ mkdir -p /root/data
$ kubectl apply -f mongo.yaml
statefulset.apps/mongodb created
service/mongodb created
storageclass.storage.k8s.io/local-storage created
persistentvolume/mongodata created
persistentvolumeclaim/mongodata created
$ kubectl get all
NAME            READY   STATUS    RESTARTS   AGE
pod/mongodb-0   1/1     Running   0          15s

NAME                 TYPE        CLUSTER-IP   EXTERNAL-IP   PORT(S)     AGE
service/kubernetes   ClusterIP   10.96.0.1    <none>        443/TCP     5d2h
service/mongodb      ClusterIP   None         <none>        27017/TCP   15s

NAME                       READY   AGE
statefulset.apps/mongodb   1/1     16s
$ kubectl get sc
NAME                 PROVISIONER                    RECLAIMPOLICY   VOLUMEBINDINGMODE      ALLOWVOLUMEEXPANSION   AGE
local-storage        kubernetes.io/no-provisioner   Delete          WaitForFirstConsumer   false                  3m52s
standard (default)   k8s.io/minikube-hostpath       Delete          Immediate              false                  5d2h
$ kubectl get pv
NAME        CAPACITY   ACCESS MODES   RECLAIM POLICY   STATUS   CLAIM               STORAGECLASS    REASON   AGE
mongodata   2Gi        RWO            Delete           Bound    default/mongodata   local-storage            3m55s
$ kubectl get pvc
NAME        STATUS   VOLUME      CAPACITY   ACCESS MODES   STORAGECLASS    AGE
mongodata   Bound    mongodata   2Gi        RWO            local-storage   3m58s
$ docker exec -it minikube bash
root@minikube:/$ cd /root/data
root@minikube:~/data$ ls
WiredTiger       WiredTiger.turtle  WiredTigerHS.wt  collection-0--1433628362673469070.wt  collection-4--1433628362673469070.wt  index-1--1433628362673469070.wt  index-5--1433628362673469070.wt  journal      sizeStorer.wt
WiredTiger.lock  WiredTiger.wt      _mdb_catalog.wt  collection-2--1433628362673469070.wt  diagnostic.data                       index-3--1433628362673469070.wt  index-6--1433628362673469070.wt  mongod.lock  storage.bson
```